VidTwin Paper Explained

[Daily Paper Review: 06-01-25] VidTwin: Video VAE with Decoupled Structure and Dynamics

Introduction

Diffusion models have emerged as the leading approach for image generation, with autoencoders like VAEs playing a crucial role in converting images to latent representations. Recently, there has been increasing interest in adapting this paradigm to video generation. However, due to the additional complexity of modeling temporal consistency in videos, capturing both visual content and temporal dependencies within a latent space is a challenging task. VidTwin proposes a novel solution to address these challenges.

Problems with Existing Methods:

Uniform Representation (First Design Philosophy):

Existing methods often represent each frame (or a group of frames) as latent vectors or tokens of uniform size.

Problem:

This approach overlooks the inherent redundancy in video data, as adjacent frames typically share significant overlap in content.

It leads to inefficiencies in compression since similar information is redundantly encoded across frames.

Decoupled Content and Motion Representation (Second Design Philosophy):

These methods separate video representations into content (static parts) and motion (dynamic changes).

Problem:

They oversimplify the relationship between content and motion, failing to model complex and fine-grained interactions between the two.

This results in unsatisfactory generation outcomes, such as blurred or unrealistic frames, and poor reconstruction quality due to the inability to handle rapid or subtle motions effectively.

VidTwin's Solution:

VidTwin proposes a novel approach by introducing two distinct latent spaces:

Structure Latent:

Encodes the global content and movement in the video, such as static objects (e.g., the table and screw) and overall semantic structure.

This captures the broad, slower-changing elements of the video.

Dynamics Latent:

Encodes fine-grained details and rapid motions, such as texture, color, and intricate movements (e.g., the screw's rotation and downward motion).

This latent space focuses on capturing dynamic, fast-changing components.

Key Advantages of VidTwin:

Comprehensive Modeling of Video Dynamics:

By learning interdependent latent representations (Structure + Dynamics), VidTwin captures both global and detailed aspects of the video.

This ensures no loss of fine-grained details, addressing the shortcomings of previous decoupling methods.

High Compression Efficiency:

VidTwin leverages the redundancy in video frames by encoding only essential structure and dynamics separately, leading to better compression rates.

Improved Reconstruction Quality:

Unlike previous methods, VidTwin reconstructs videos with higher fidelity, maintaining sharpness, natural motion, and temporal coherence.

Interdependency Awareness:

The model understands and exploits the relationship between structure and dynamics, leading to a more accurate representation of complex video content.

The entire pipeline for generating videos can be summarized in three main stages:

Goal of Structure Latent Extraction:

The Structure Latent aims to encode the low-frequency temporal motion trends and global semantic information from a video while removing redundant details and downsampling the spatial dimensions.

Goal of Dynamics Latent Extraction

Extract local, rapid motion details (high-frequency temporal information) from the video latent z produced by the encoder E(x). Maintain spatial consistency and compactness while effectively reducing the spatial dimensionality of the latent representation.

Why Not Use a Spatial Q-Former?

A spatial Q-Former could be used to select the most relevant spatial locations. However, it disrupts spatial consistency, meaning the spatial relationships between pixels (or patches) across frames are lost, which degrades performance.

Instead, the authors propose a carefully designed spatial aggregation approach.

Overview of Decoding Latents to Video

Step 3: Refinement Layers

After initial upsampling, the model uses refinement layers to improve the decoded frames. These layers ensure the generated video has realistic textures, motion coherence, and high perceptual quality.

Typical refinement layers include:

Residual convolutional blocks.

Attention mechanisms (to enforce temporal and spatial consistency).

Loss Functions in VidTwin

VidTwin uses a combination of loss functions to optimize the training of the video generation pipeline. These losses ensure the reconstruction of high-quality videos, enforce smoothness in the latent space, and provide meaningful conditioning for the integration with generative models like diffusion models. Here's the detailed breakdown:

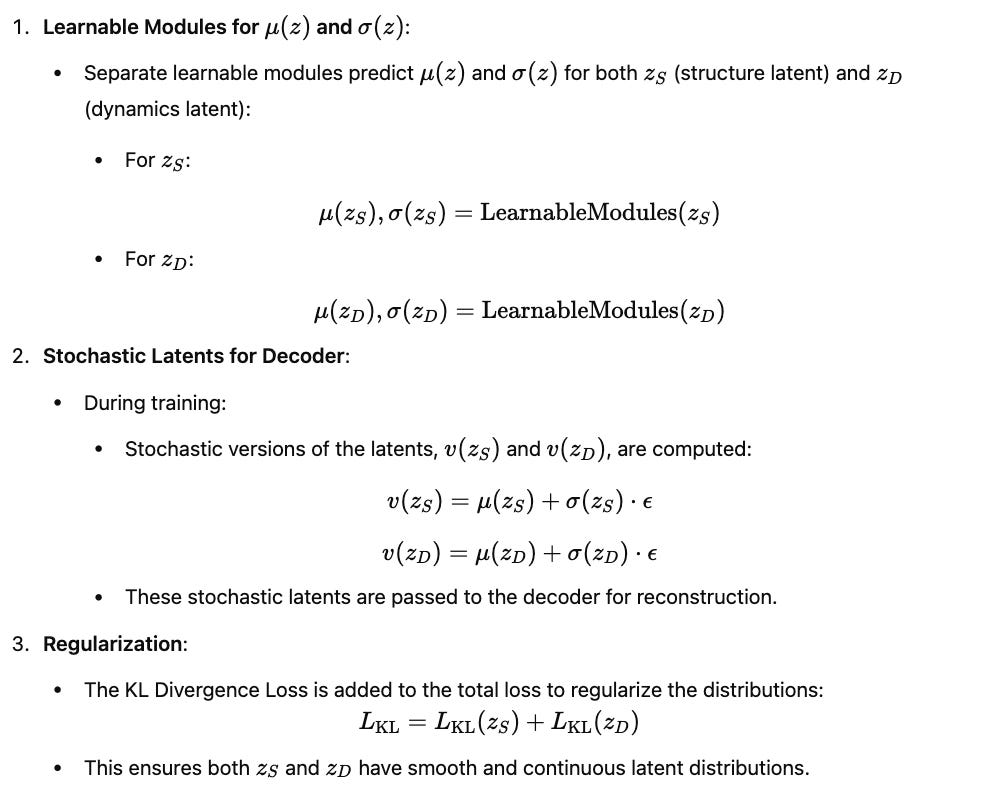

4. KL Divergence Loss

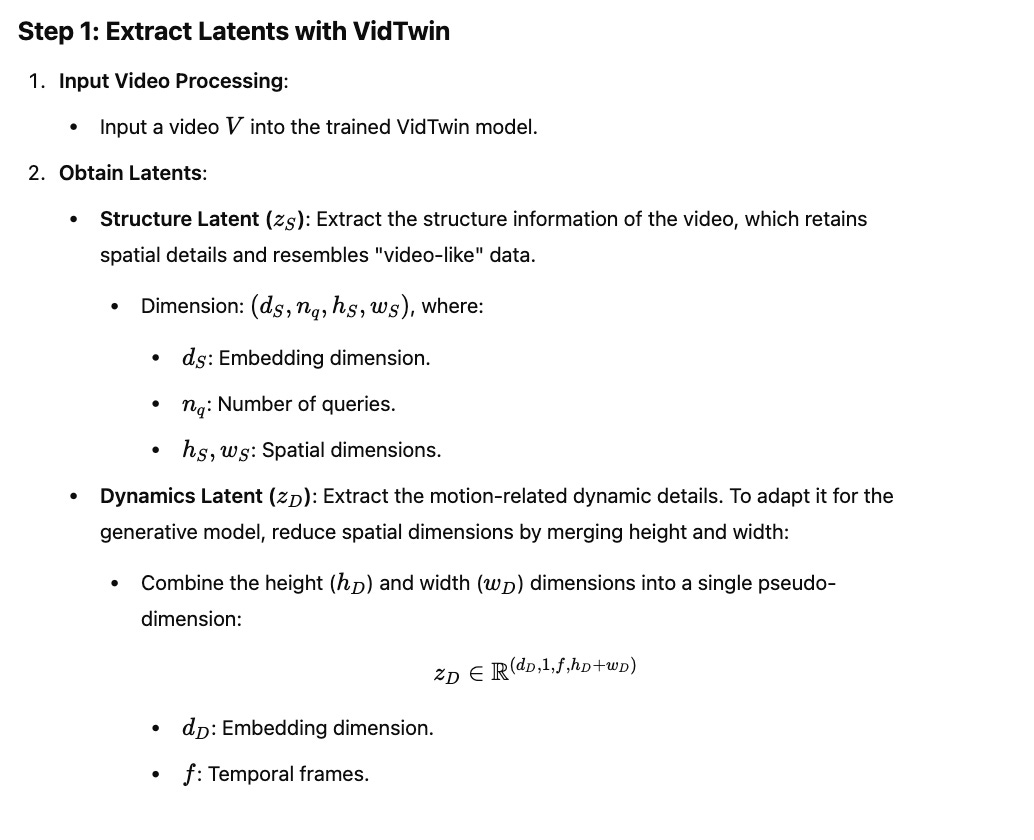

Steps to Integrate VidTwin with a Generative Model

VidTwin is designed to work seamlessly with generative models, such as diffusion models, for conditional video generation. Below are the detailed steps to integrate VidTwin into the generative pipeline:

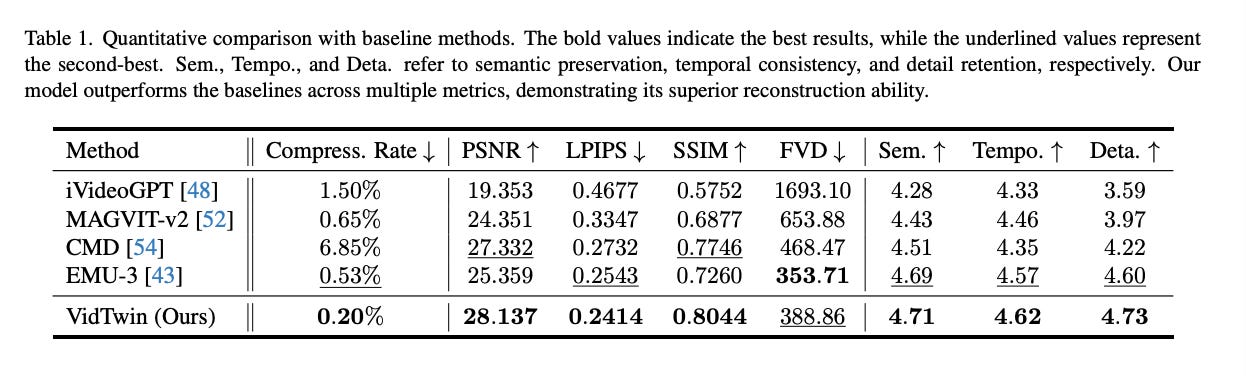

Dataset Details:

Training Dataset: A large-scale, self-collected video-text dataset with 10 million video-text pairs, covering a broad variety of content and motion speeds.

Evaluation Datasets:

MCL-JCV Dataset: Used for evaluating video compression quality.

UCF-101 Dataset: Used to verify the adaptability of the model's latent space for class-conditioned video generation. It contains 101 different classes of motion videos.

Training Configuration:

Video Clips:

8 fps, 16-frame, 224×224 video clips for training.

25 fps, 16-frame, 224×224 video clips for evaluation.

Model Backbone:

Spatial-Temporal Transformer with a hidden dimension of 768 and a patch size of 16.

Both the encoder and decoder consist of 16 layers with 12 attention heads.

Metrics for Evaluation:

Standard Reconstruction Metrics:

PSNR (Peak Signal-to-Noise Ratio)

SSIM (Structural Similarity Index)

LPIPS (Learned Perceptual Image Patch Similarity)

FVD (Frechet Video Distance)

Human Evaluation Metrics:

Mean Opinion Score (MOS): Based on ratings from 15 professional evaluators across three criteria:

Semantic Preservation (Sem.)

Temporal Consistency (Tempo.)

Detail Retention (Deta.)

Compression Rate (Compress. Rate): Defined as the ratio between the dimension of the latent space (or token embeddings) used in the downstream generative model and the input video’s dimension.