Self-adaptive Large Language Models (LLMs) are an emerging area of research aiming to enhance the flexibility and efficiency of LLMs in dynamic and diverse task scenarios. These models dynamically adjust their behavior during inference without the need for retraining, allowing them to respond effectively to unseen tasks or changing contexts.

The primary goal is to overcome the limitations of static, fine-tuned LLMs that require extensive computational resources and are inflexible in handling new tasks. Self-adaptation is inspired by principles in neuroscience and computational biology, where specialized modules or regions activate dynamically based on specific requirements. This modularity enables efficient task handling, continual learning, and scalability, making it a promising direction for building robust AI systems.

Key concepts in the field include:

Modularity and Task-Specific Experts: Developing specialized modules (e.g., expert vectors) for different tasks, which can be dynamically composed based on the task demands.

Efficient Parameter Usage: Employing parameter-efficient fine-tuning (PEFT) methods like Low-Rank Adaptation (LoRA) to reduce the computational and memory footprint.

Dynamic Adaptation During Inference: Using mechanisms like Mixture of Experts (MoE) systems to activate specific modules during inference rather than relying on static configurations.

While significant progress has been made, challenges such as scalability, overfitting in expert modules, and effective task-specific composition remain largely unresolved.

The Problem with Current Approaches

Traditional LLM training and fine-tuning approaches face several limitations in achieving self-adaptive behavior:

Computational Inefficiency: Fine-tuning LLMs for each new task requires retraining or updating a large number of parameters, which is resource-intensive.

Static Behavior: Fine-tuned models are static and cannot adapt to unseen tasks during inference.

Overfitting: Narrow-task fine-tuning often leads to overfitting, especially when training on small datasets.

Scalability Issues: The number of parameters required for expert modules in methods like MoE can grow significantly, leading to increased storage and computational demands.

Task Interference: Composing multiple task-specific modules can lead to conflicts or degraded performance, making it challenging to handle diverse tasks effectively.

Transformer2: Key Points and How It Solves the Problem

Transformer2 introduces a novel framework for self-adaptive LLMs, addressing the limitations of traditional approaches through the following innovations:

Singular Value Fine-tuning (SVF):

SVF focuses on fine-tuning only the singular values of weight matrices in the LLM.

By adjusting these components, Transformer2 reduces computational demands and mitigates overfitting while preserving the expressive power of the model.

Expert vectors trained with reinforcement learning (RL) on narrow datasets become compact and inherently compositional.

Two-Pass Inference Mechanism:

First Pass: Identifies the properties of the incoming task through a dispatch system.

Second Pass: Dynamically combines task-specific expert vectors to modify the base model’s weights in real time, tailoring its behavior to the task at hand.

Dynamic Task-Specific Adaptation:

During inference, Transformer2 selectively activates and combines pre-trained expert modules based on the task requirements.

This modularity allows the system to adapt to diverse tasks, even those not seen during training, without retraining the base model.

Reinforcement Learning for Expert Training:

Experts are fine-tuned using RL, optimizing for task performance and ensuring domain-specific specialization.

This approach enhances the adaptability and efficiency of the system.

Scalable and Versatile Design:

Transformer2 supports various LLM architectures and modalities, including vision-language tasks.

Expert vectors can be reused across different models, further reducing storage and training cos

Differences Between MoE Systems and Transformer2

1. Routing Mechanism:

MoE Systems:

Use token-level routing strategies, where each token in the input sequence is dynamically routed to a subset of expert modules (e.g., MLPs) that are activated during inference.

The routing decisions are made per token, allowing for fine-grained specialization but introducing overhead due to token-level granularity.

Transformer2:

Employs a sample-level module selection strategy, where the adaptation happens for the entire input sample (e.g., a sequence or task).

This coarser-grained routing reduces complexity and makes the model more efficient while still achieving task-specific adaptation.

2. Expert Module Construction:

MoE Systems:

Expert modules are typically trained from scratch or based on pre-trained dense models (e.g., upcycled models).

No auxiliary loss is explicitly designed to ensure that each expert specializes in a specific domain. This can lead to suboptimal or overlapping specializations among experts.

Transformer2:

Expert vectors are trained using reinforcement learning (RL) with explicit objectives to acquire domain-specific knowledge.

This auxiliary loss ensures that the experts develop true specialization and compositionality, improving their effectiveness and reducing interference between modules.

3. Sparsity Mechanism:

MoE Systems:

Use sparsely activated experts, where only a small subset of experts is selected and activated per token to reduce computational costs.

This sparsity mechanism optimizes inference time by minimizing the number of active parameters for each token.

Transformer2:

Achieves efficiency through a compact expert vector mechanism, where only a small, lightweight vector is used to modulate the base model's parameters.

This approach avoids the overhead of routing large subsets of the model, making the system both computationally and storage-efficient.

4. Adaptation Granularity and Flexibility:

MoE Systems:

Token-level routing provides fine-grained control, but it is computationally expensive and less suited for scenarios requiring sample-level adaptation.

These systems are less flexible for real-time adaptation to unseen tasks or domains.

Transformer2:

The sample-level module selection strategy is more efficient and allows the model to dynamically adapt to the requirements of entire tasks or input samples, making it better suited for real-world applications involving diverse and unseen tasks.

5. Modular Reusability:

MoE Systems:

Experts are often tightly coupled to the original training setup and may not generalize well across different tasks or models.

Transformer2:

Expert vectors are designed to be compact and compositional, enabling their reuse across different models and tasks.

This modular design enhances scalability and reduces the need for redundant training.

Transformer2 Overview

Transformer2 is an architecture built upon pre-trained large language models (LLMs). It uses Singular Value Fine-tuning (SVF) to modify the weight matrices of the model in a highly efficient and structured way, allowing adaptation to new tasks with minimal additional parameters.

Key Steps:

Singular Value Fine-tuning (SVF): Learn a compact set of expert vectors zzz to scale the singular values of pre-trained weight matrices.

Adaptive Combination of Experts: Use three strategies (prompt-based, classifier-based, or mixture-based) to adaptively combine these learned expert vectors during inference.

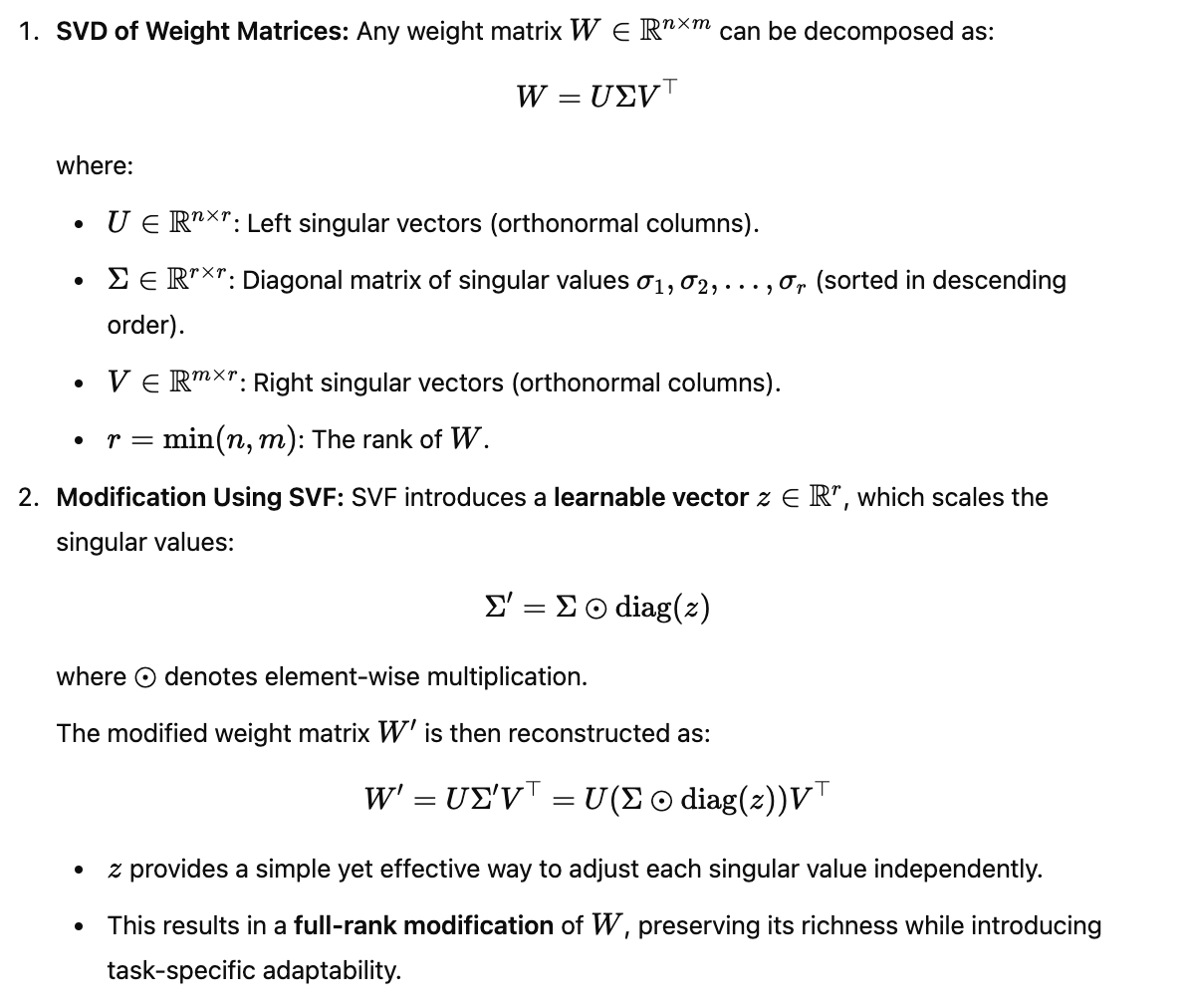

Singular Value Fine-Tuning (SVF)

SVF fine-tunes pre-trained models by modifying the singular values of the weight matrices W in each layer. Instead of directly updating W, SVF adjusts a set of compact learnable vectors z that scale the singular values in W.

Why Singular Values? Singular values encode the "strength" or importance of individual components of a weight matrix. By modifying them, SVF allows targeted changes to the behavior of the model while preserving the structural knowledge already present in W.

Optimization of SVF with Reinforcement Learning

Instead of relying on standard supervised objectives (e.g., next-token prediction), SVF uses Reinforcement Learning (RL) to directly optimize task performance.

This allows for fine-tuning on tasks where standard supervision is difficult (e.g., tasks with sparse or binary rewards).

The passage describes self-adaptation within the framework of Transformer2, focusing on test-time adaptation for Large Language Models (LLMs). It uses Singular Value Fine-tuning (SVF) to create "expert" vectors and applies various self-adaptive strategies to dynamically improve performance on diverse tasks. Let's break this down step by step, including the mathematics, methodologies, and technical details.

Overview: Self-Adaptation in Transformer2

Objective: Equip LLMs with dynamic test-time adaptation using expert vectors (z1,z2,…,zK) trained via SVF for specific capabilities (e.g., coding, math).

Two-Pass Adaptation:

First Pass: The model observes the input and generates an adaptation vector z′ tailored to the task based on the test-time behavior.

Second Pass: The model uses z′ to modify its weights for the final response.

Role of SVF: The pre-trained "expert vectors" provide modular capabilities (e.g., coding, reasoning). The self-adaptive strategies determine the most appropriate combination of these vectors for a given task.

Adaptation Strategies

CEM for Few-Shot Adaptation

Summary of Experiments and Results:

Goals of Experiments:

Assess the efficiency and effectiveness of SVF (Sparse Vector Fine-tuning).

Demonstrate self-adaptiveness using the three adaptation strategies (Prompt, Cls-Expert, and Few-shot).

Conduct in-depth analysis and ablation studies to understand the framework's properties.

Experimental Setup:

Pre-trained LLMs Evaluated: LLAMA3-8B-INSTRUCT, MISTRAL-7B-INSTRUCT-V0.3, LLAMA3-70B-INSTRUCT.

Tasks Evaluated:

Training: GSM8K (math), MBPP-Pro (coding), ARC-Easy (reasoning).

Testing: MATH, Humaneval, ARC-Challenge (reasoning), and OKVQA (vision-language).

SVF-trained z-vectors were fine-tuned for task-specific performance and evaluated across pure-language and vision-language tasks.

Key Findings:

SVF Performance:

Outperforms LoRA across most tasks and models.

Requires significantly fewer training resources (less than 10% of LoRA’s parameters).

Consistently improves performance across tasks, including a 39% boost in vision-language (OKVQA) tasks.

Self-Adaptation Results:

Transformer2 shows significant improvements over LoRA for unseen tasks:

Few-shot self-adaptation consistently achieves the best results across tasks.

Transformer2 adapts effectively across domains, including vision-language tasks, using language-trained experts.

LoRA underperforms in some tasks (e.g., MATH, Humaneval) due to overfitting.

Efficiency and Scalability:

Two-pass inference is used for prompt adaptation but increases runtime. The second pass is proportional to token generation.

Transformer2 provides a framework for lifelong learning and continuous improvement, especially in few-shot settings.

Performance Highlights:

LLAMA3-8B-INSTRUCT: Few-shot adaptation improves performance by up to 4% on MATH, Humaneval, and ARC-Challenge compared to LoRA.

MISTRAL-7B-INSTRUCT: Few-shot adaptation achieves up to 9% improvement on Humaneval and ARC-Challenge.

Vision-Language Tasks: Transformer2 boosts LLAMA3-LLAVA-NEXT-8B performance on OKVQA, showcasing its flexibility for multimodal applications.

Efficiency Observations:

The first pass (self-adaptation) takes significantly less time than the second pass (problem-solving).

Efficiency can be improved by reducing input length or optimizing adaptation methods.

Conclusion:

Transformer2 with SVF demonstrates strong generalization, flexibility, and resource efficiency, outperforming LoRA in adapting to unseen tasks. Few-shot self-adaptation emerges as the most effective strategy, enabling continual performance improvements in diverse settings.