TA-TiTok Paper Explained

[Daily Paper Review: 20-01-25] Democratizing Text-to-Image Masked Generative Models with Compact Text-Aware One-Dimensional Tokens

Modern text-to-image generative models rely heavily on image tokenizers to represent images as latent tokens that can be paired with text prompts for generation. However, there are several challenges associated with existing approaches:

Complex Training Pipelines:

Traditional image tokenizers, like those based on 2D grids (e.g., VQGAN), struggle with the inherent redundancy in images (similarities between neighboring patches).

State-of-the-art 1D tokenizers, such as TiTok, rely on a two-stage training process, which is computationally expensive and difficult to scale to larger datasets.

Dependency on Proprietary Data:

Current high-performing text-to-image models are trained on large-scale private datasets. This makes them difficult to replicate and inaccessible to researchers without access to such datasets.

Suboptimal Semantic Alignment:

Existing tokenizers and models often focus on reconstructing low-level image details (like pixels or patches), which may lead to poor semantic alignment with the associated textual descriptions.

Computational Costs:

Many models use resource-heavy architectures (e.g., T5-XXL text encoders) and extensive training pipelines, making them less accessible for groups with limited computational resources.

What TA-TiTok and MaskGen Aim to Achieve

To address these issues, TA-TiTok (Text-Aware Transformer-based 1D Tokenizer) and MaskGen (Masked Generative Models) are proposed with the following goals:

Efficiency: Reduce training and inference costs while maintaining high-quality text-to-image generation.

Scalability: Streamline the tokenizer training process to support large-scale datasets without multi-stage complexity.

Accessibility: Use only publicly available datasets and open-source the model weights to democratize text-to-image generative models.

Enhanced Performance: Improve semantic alignment between text and images, allowing for more faithful and detailed image generation.

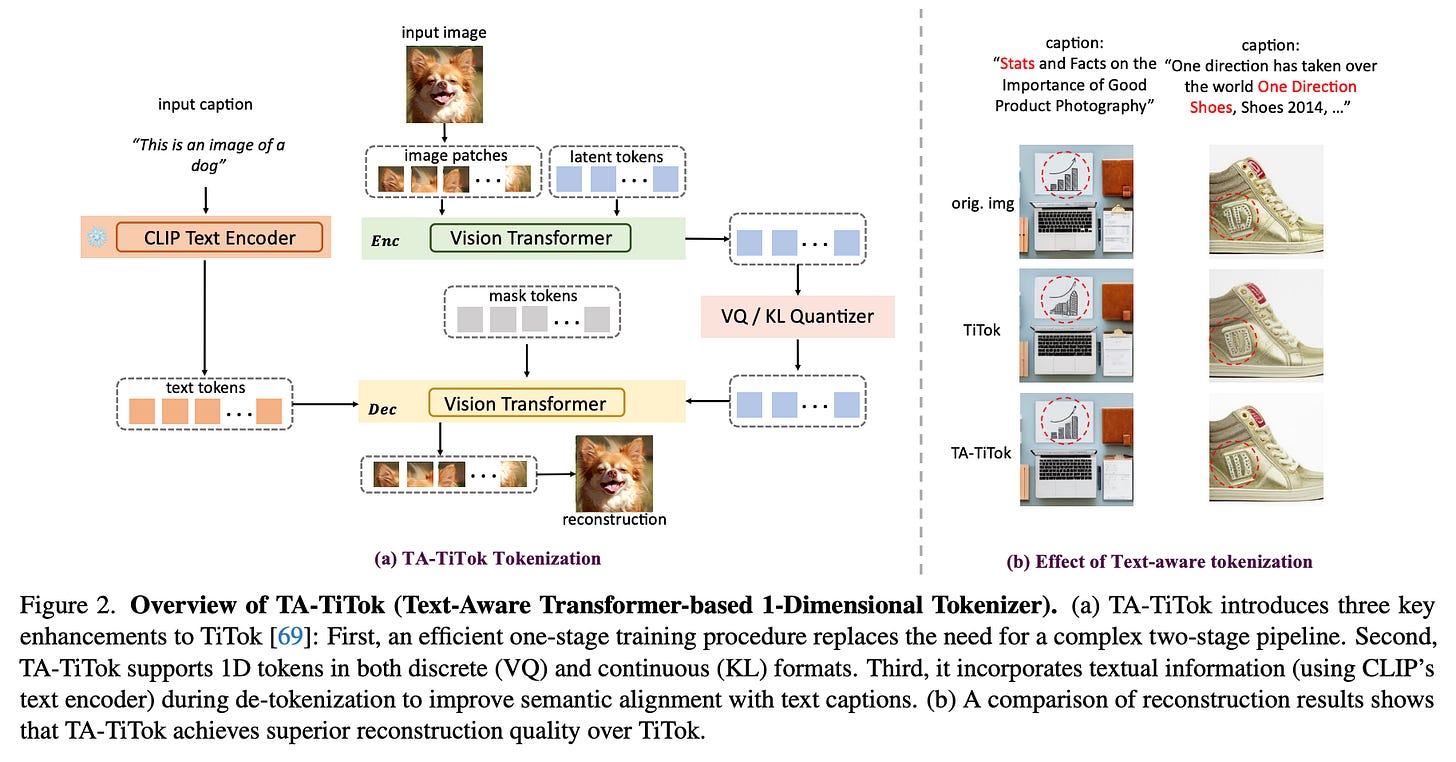

How TA-TiTok Solves the Problem

1. Simplified Training Pipeline

TA-TiTok introduces a one-stage training process, eliminating the complex two-stage pipeline of prior tokenizers (e.g., TiTok).

This simplification enables efficient and scalable training on large datasets without sacrificing performance.

2. Compact and Flexible 1D Tokens

Unlike traditional 2D grid-based tokenization, TA-TiTok uses compact 1D tokens that are not tied to fixed image patches.

Each token can represent any region in an image, reducing redundancy and increasing sampling efficiency.

3. Continuous VAE Representations

TA-TiTok supports continuous tokens via Variational Autoencoders (VAE), which improve image reconstruction quality by avoiding the quantization losses seen in discrete tokens (e.g., VQ-VAE).

4. Text Integration During Decoding

Textual information is incorporated during the de-tokenization (decoding) stage by concatenating CLIP embeddings of captions with the tokenized image representations.

This allows for better semantic alignment between text prompts and the generated images, ensuring high-level coherence.

5. Open Data and Lightweight Components

TA-TiTok and MaskGen are trained on publicly available datasets like DataComp, LAION, and CC12M, filtered and curated to ensure quality.

By using CLIP for text encoding instead of resource-heavy alternatives like T5-XXL, the approach becomes more computationally efficient.

Let’s Go More Deep Dive into it

Overview of TA-TiTok

TA-TiTok enhances the original TiTok tokenizer with:

Improved one-stage training.

Support for both discrete (VQ) and continuous (KL) tokens.

Text-aware de-tokenization to incorporate semantic alignment with text.

Overview of MaskGen

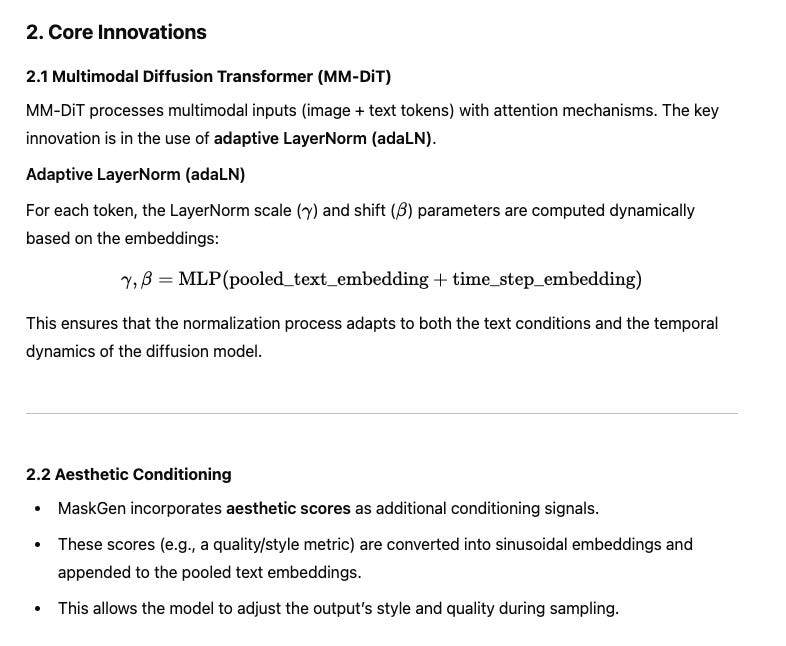

MaskGen is a text-to-image generative model that supports both discrete and continuous token representations from TA-TiTok. Key components include:

TA-TiTok for tokenization: Converts images into compact 1D latent tokens (discrete or continuous).

CLIP text encoder: Generates global and pooled text embeddings from captions.

Multimodal Diffusion Transformer (MM-DiT): Processes text and image tokens together using specialized attention mechanisms and adaptive LayerNorm layers.

Aesthetic conditioning: Adds an extra layer of control over the output quality and style.

Two training strategies:

Cross-entropy loss for discrete tokens.

Diffusion loss for continuous tokens.

Implementation Details

TA-TiTok Variants:

Three variants with K=32,64,128 latent tokens.

Uses a patch size f=16

VQ variant: Codebook with 8192 entries, 64-channel vectors.

KL variant: Continuous embeddings with 16 channels.

MaskGen Variants:

MaskGen-L: 568M parameters.

MaskGen-XL: 1.1B parameters.

Continuous token processing adds DiffLoss MLP:

44M parameters (MaskGen-L).

69M parameters (MaskGen-XL).

Token counts:

Discrete: 128 tokens.

Continuous: 32 tokens.

Datasets:

Training datasets: DataComp1B, CC12M, LAION-aesthetic, LAION-art, LAION-pop, JourneyDB, DALLE3-1M.

Filtering: Only images with longer side > 256 pixels and aesthetic scores > 5.0 for pretraining, > 6.0 for fine-tuning.

Enhanced captions using Molmo prompts.

Training:

TA-TiTok:

Batch size: 1024.

Steps: 650k.

Learning rate: 1×10−4, cosine schedule.

MaskGen:

Discrete tokens: Batch size 4096, 4×10−4 max LR.

Continuous tokens: Batch size 2048, 1×10−4 constant LR.

Masking rate: Random (0–1), cosine schedule.

Key Insights

1. One-Stage Training Recipe

An improved one-stage training recipe significantly improves performance, yielding a relative rFID↓ improvement of 2.72 on the ImageNet dataset compared to previous schemes.

Better optimization strategies help achieve better generative quality.

2. Text-Aware De-Tokenization (TA-TiTok) Impact

TA-TiTok consistently outperforms its non-text-aware counterpart (TiTok) across all configurations.

Continuous tokens (KL) perform better than discrete tokens (VQ) in terms of both rFID and IS metrics.

Example: For 128 tokens, TA-TiTok KL achieved rFID = 1.02 and IS = 209.7, while VQ had rFID = 2.63 and IS = 168.1.

Smaller token counts (e.g., 32) benefit more from text-awareness due to the challenge of capturing semantic details with fewer tokens.

3. Text Guidance Type

Using CLIP text embeddings instead of numerical IDs provides marginal improvements in performance:

rFID improves from 1.62 to 1.53, and IS improves from 213.6 to 222.0.

This suggests that richer text features improve alignment between text and image representations.

4. Token Count and Model Complexity

More tokens improve generation quality but increase computational cost:

For VQ MaskGen-L, increasing tokens from 32 to 128 reduced FID from 9.11 to 7.74 on MJHQ-30K but also slowed inference speed.

Training costs and inference throughput are higher for KL variants than for VQ:

KL excels in aesthetic and diversity-focused tasks, whereas VQ performs better for compositional accuracy (e.g., object count, position, and colors).

5. Aesthetic Score Conditioning

Incorporating aesthetic scores during training leads to more fine-grained and visually appealing generations.

Example: FID improves from 8.66 to 7.85 when aesthetic scores are used.

Higher aesthetic scores result in more detailed images (e.g., better rendering of stars, trees, and textures).

6. VQ vs. KL Performance

KL variants outperform VQ in FID and overall diversity metrics:

Example on MJHQ-30K: MaskGen-XL KL achieved FID = 6.53, whereas VQ achieved 7.51.

VQ, constrained by a finite codebook, performs better for compositional accuracy:

Example: On GenEval, VQ MaskGen-XL achieved Overall = 0.57, while KL achieved 0.55.

KL incurs higher computational demands due to its diffusion-based nature.

7. Text Guidance Placement

Text guidance in both the encoder and decoder of TA-TiTok was tested, but injecting text guidance only in the decoder proved most effective, aligning semantic generation more closely with textual descriptions.

8. Conditioning with Additional Signals

Experiments with various conditioning signals (e.g., object semantics, positions) enhance model control and improve specific dimensions of image quality (e.g., count, color, and attribute accuracy).