Centurio Paper Explained

[Daily Paper Review: 12-01-25] Centurio: On Drivers of Multilingual Ability of Large Vision-Language Model

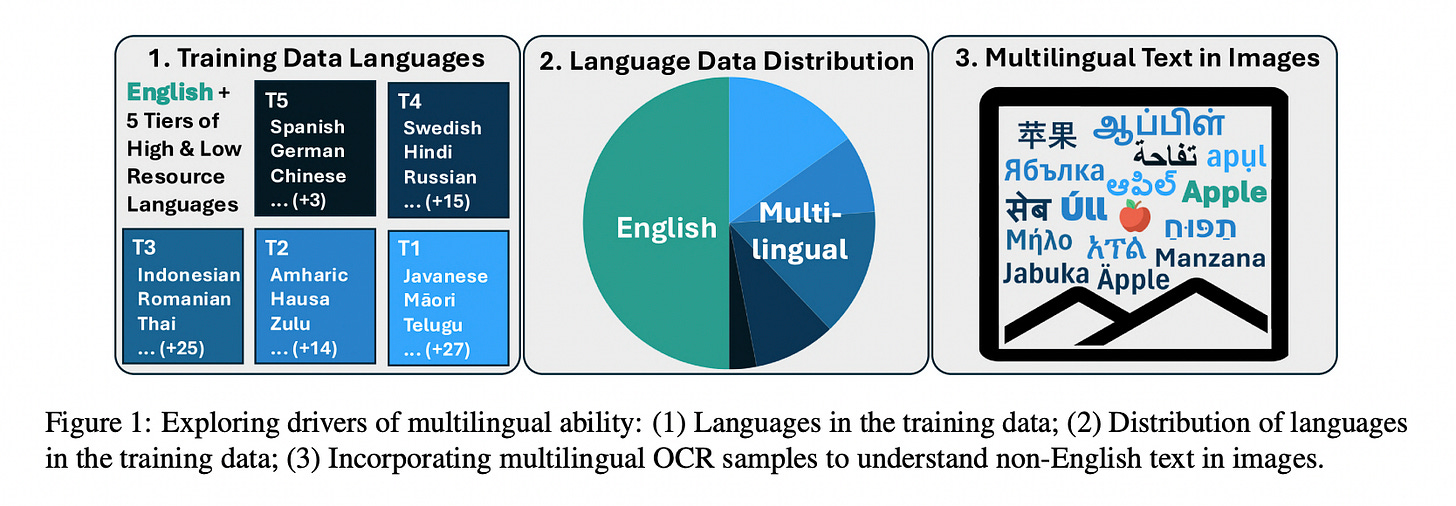

Introduction

Multilingual learning in large vision-language models (LVLMs) has made substantial strides, particularly with the introduction of Centurio, a state-of-the-art multilingual LVLM trained on insights derived from four critical research questions. This paper delivers a comprehensive guide on optimizing multilingual training for LVLMs, particularly in supporting low-resource languages and handling multilingual OCR tasks.

Key Takeaways

Why Multilingual LVLMs Matter

Multilingual LVLMs empower global accessibility, supporting diverse languages across text-in-image tasks, natural image processing, and cross-lingual reasoning.

Centurio exemplifies the balance between high English performance and robust multilingual capabilities, outperforming existing models in many low-resource language tasks.

Research Questions (RQs) Explored

1. Number of Training Languages (RQ1)

Does training on a limited set of high-resource languages suffice, or is explicit inclusion of low-resource languages necessary?

Findings: Including more languages during training improves performance for all languages. Adding low-resource languages minimally impacts high-resource languages while enhancing multilingual fidelity.

Practical Takeaway: Explicit training on all target languages is feasible and beneficial.

2. Language Distribution in Instruction-Tuning (RQ2)

How much of the instruction-tuning data should be multilingual vs. English?

Findings: Peak performance is achieved when 25% to 75% of the data is in English. Low-resource languages benefit from a higher share of multilingual data, whereas high-resource languages prefer more English.

Practical Takeaway: A 50:50 split between English and multilingual data is optimal for balanced performance.

3. Language Distribution in Pre-Training (RQ3)

What is the ideal mix of English and multilingual data during pre-training?

Findings: Balanced pre-training (50% English, 50% multilingual) leads to the best multilingual performance. English-only pre-training benefits English tasks but has negligible effects on others.

Practical Takeaway: A multilingual mix during pre-training boosts low-resource language capabilities.

4. Improving Multilingual Text-in-Image Tasks (RQ4)

How can models improve on tasks requiring text recognition and grounding within images?

Findings: Synthetic multilingual OCR data significantly improves performance across all languages, especially non-Latin scripts. Fine-tuning the image encoder is critical for optimal results. However, gaps remain for non-Latin scripts due to limited training data.

Practical Takeaway: Large-scale multilingual synthetic OCR datasets and fine-tuning image encoders are essential.

RQ Experiments in Depth

The paper groups languages into five tiers, T1 through T5, according to how much data and other resources are available for training. Grouping languages with similar resource levels gives the experiments their structure:

T5: High-resource languages. These are languages with abundant training data, including languages widely spoken or commonly used in technology (e.g., English, Chinese, Spanish).

T4 to T1: Medium- to low-resource languages. As the tier number decreases (T4 → T1), the amount of training data and resources available for these languages decreases. T1 represents the lowest-resource languages, often including those spoken by smaller populations or less commonly represented in multilingual datasets.

L100: This refers to a set of 100 languages used in the experiments, combining languages from all tiers (T5 to T1). This ensures that the experiments cover a broad spectrum of languages, from high-resource to low-resource ones.

1. Architecture

Model Framework: Based on the LLaVA architecture.

Image Encoder: SigLIP SO400/384 encodes images into visual tokens.

LLM: Uses Phi 3.5 (3.8B parameters) for strong multilingual capabilities with computational efficiency.

Token Processing: Visual tokens are projected into the LLM embedding space using a 2-layer MLP and concatenated with text tokens for input.

Generalizability Check: Subset experiments were repeated with Llama 3 (8B) to ensure findings are applicable to other LLMs.
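To make the token-processing step concrete, here is a minimal sketch in plain Python of a 2-layer MLP projector followed by concatenation with text embeddings. The toy dimensions, the GELU activation, the random initialization, and the choice to place visual tokens before text tokens are all assumptions made for illustration; the summary above only states that a 2-layer MLP is used and that the result is concatenated with the text tokens.

```python
import math
import random

def linear(x, weight, bias):
    """Apply y = W x + b to one vector; weight is a list of output rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]

def gelu(v):
    """GELU activation, tanh approximation (activation choice is an assumption)."""
    return 0.5 * v * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (v + 0.044715 * v ** 3)))

def init_linear(rng, d_in, d_out):
    """Random small weights and zero bias, purely for demonstration."""
    weight = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(d_out)]
    return weight, [0.0] * d_out

def project_visual_tokens(visual_tokens, layer1, layer2):
    """Map each visual token into the LLM embedding space with a 2-layer MLP."""
    projected = []
    for token in visual_tokens:
        hidden = [gelu(v) for v in linear(token, *layer1)]
        projected.append(linear(hidden, *layer2))
    return projected

rng = random.Random(0)
D_VISION, D_LLM = 8, 16  # toy sizes, not the real encoder or LLM widths
layer1 = init_linear(rng, D_VISION, D_LLM)
layer2 = init_linear(rng, D_LLM, D_LLM)

visual_tokens = [[rng.gauss(0.0, 1.0) for _ in range(D_VISION)] for _ in range(4)]
text_embeddings = [[rng.gauss(0.0, 1.0) for _ in range(D_LLM)] for _ in range(3)]

# Concatenate along the sequence axis: projected visual tokens, then text tokens.
llm_input = project_visual_tokens(visual_tokens, layer1, layer2) + text_embeddings
print(len(llm_input), len(llm_input[0]))
```

After projection every token, visual or textual, has the same width, which is what lets the LLM treat the image as a prefix of ordinary sequence positions.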

2. Training Setup

Training Stages:

Pre-training: Focuses on image captioning with dense captions.

Instruction Tuning: Uses diverse vision-language tasks with public datasets.

Optimization Strategy:

Skip pre-training during initial language distribution analysis for cost-efficiency.

Conduct separate searches for optimal language distributions during pre-training and instruction tuning.

Parameter Updates:

The image encoder is frozen.

Only the MLP projector and the LLM are updated; the LLM weights are trained through LoRA (Low-Rank Adaptation).
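For readers unfamiliar with LoRA, the sketch below shows the standard formulation: the frozen weight W stays untouched and a low-rank product B·A, scaled by alpha/rank, is added on top. The rank, alpha, and layer width used here are hypothetical values for illustration, not Centurio's actual configuration.

```python
def matvec(matrix, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in matrix]

def lora_forward(x, w_frozen, a, b, alpha):
    """Compute h = W x + (alpha / rank) * B (A x); only A and B would be trained."""
    rank = len(a)                      # A has shape (rank, d_in)
    base = matvec(w_frozen, x)         # frozen path
    update = matvec(b, matvec(a, x))   # low-rank path, B has shape (d_out, rank)
    scale = alpha / rank
    return [h + scale * u for h, u in zip(base, update)]

def lora_param_count(d_in, d_out, rank):
    """Trainable parameters in A (rank x d_in) plus B (d_out x rank)."""
    return rank * d_in + d_out * rank

# Hypothetical layer size and rank, chosen only to show the order of magnitude.
d_in = d_out = 3072
rank = 16
full = d_in * d_out
low_rank = lora_param_count(d_in, d_out, rank)
print(f"full: {full:,}  LoRA: {low_rank:,}  ratio: {low_rank / full:.4f}")
```

The point of the comparison is that the trainable adapter is a small fraction of the full weight matrix, which is what keeps a large grid of language-mix experiments affordable.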

3. Training Data

Multilingual Data Acquisition:

Used machine-translated (MT) data due to the lack of sufficient high-quality multilingual datasets.

Translation was performed with the open NLLB model to translate English datasets into multiple languages.

Text-in-image datasets were excluded from translation to avoid mismatched text.

Data Sources:

Instruction tuning: Adapted from LLaVA-Next, containing 0.77M samples.

Pre-training: Used 1.3M dense captions from ShareGPT4v.

Limitations:

MT data quality may be lower, especially for low-resource languages, but findings suggest improvements with higher-quality translations.

4. Evaluation

Test Suite:

Covers 13 tasks across 43 languages.

Languages grouped into 5 tiers:

T5: High-resource languages (e.g., German, Chinese).

T1: Extremely low-resource languages (e.g., Maori, Telugu).

Task Types:

Discriminative Tasks: Binary or multiple-choice answers.

Generative Tasks: Models generate target-language outputs, evaluated for both task performance and language fidelity (maintaining the instruction language).

Performance Metrics:

Results are averaged across tasks and languages within tiers.

English performance is separately reported and excluded from T5.
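One way to picture this reporting scheme is the small helper below. It assumes a flat mean over all (task, language) scores within a tier; the paper may average in a different order (for example per task first). The scores and the language-to-tier mapping are made-up toy values, not results from the paper.

```python
from collections import defaultdict
from statistics import mean

def tier_averages(scores, tier_of, english="en"):
    """Average scores per tier, keeping English in its own bucket.

    scores:  {(task, language): score}
    tier_of: {language: "T1" .. "T5"} for non-English languages
    """
    buckets = defaultdict(list)
    for (task, lang), score in scores.items():
        key = english if lang == english else tier_of[lang]
        buckets[key].append(score)
    return {key: mean(values) for key, values in buckets.items()}

# Toy numbers for illustration only.
toy_scores = {
    ("vqa", "en"): 80.0,
    ("vqa", "de"): 70.0,
    ("captioning", "de"): 60.0,
    ("vqa", "mi"): 30.0,
}
toy_tiers = {"de": "T5", "mi": "T1"}
print(tier_averages(toy_scores, toy_tiers))
```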

RQ1: Number of Training Languages

This section explores whether training on a large number of languages is beneficial and whether it negatively impacts performance for individual languages. Key insights and findings are summarized below:

Research Question

Core Issue: Does training on a few high-resource languages suffice, leveraging cross-lingual transfer, or is it necessary to include all targeted languages explicitly?

Potential Trade-Off: Does increasing the number of training languages degrade performance due to smaller data allocation for each language?

Experimental Setup

Instruction Tuning Data Split:

50% in English (fixed for all experiments).

The remaining 50% is divided equally among N languages, with each language receiving 50%/N of the total data budget.

Incremental Language Addition:

Gradually increases the number of languages N from high-resource (T5) to extremely low-resource (T1).

Experimental setups:

T5 (N=6),

T5-T4 (N=24),

T5-T3 (N=52),

T5-T2 (N=69),

L100 (N=99), which adds the T1 languages for a total of 99 non-English languages (100 with English).
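The arithmetic behind these setups is easy to check. The sketch below assumes the total budget equals the 0.77M instruction-tuning samples mentioned earlier and that the split is exact; both are simplifying assumptions made for illustration.

```python
def samples_per_language(total_samples, n_languages, english_share=0.5):
    """Samples each non-English language receives when the rest is split equally."""
    return int(total_samples * (1.0 - english_share) / n_languages)

TOTAL = 770_000  # assumed budget, taken from the 0.77M figure above
SETUPS = {"T5": 6, "T5-T4": 24, "T5-T3": 52, "T5-T2": 69, "L100": 99}

for name, n in SETUPS.items():
    share = 50.0 / n
    print(f"{name:>6}: N={n:>2}  {share:.2f}% per language  "
          f"~{samples_per_language(TOTAL, n):,} samples each")
```

Moving from 6 to 99 languages shrinks each language's slice by more than an order of magnitude, which is why the absence of a clear penalty in the results is notable.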

Evaluation Metrics:

General task performance.

Language fidelity: Ability to produce output in the correct target language.
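Language fidelity can be read as a simple ratio: of all generated outputs, how many are in the requested language. The sketch below takes the language detector as a parameter because the summary does not say which identifier is used; `toy_detector` is a deliberately crude, hypothetical stand-in and not a real language-ID model.

```python
def language_fidelity(outputs, target_language, detect_language):
    """Fraction of outputs whose detected language matches the target language."""
    if not outputs:
        return 0.0
    hits = sum(1 for text in outputs if detect_language(text) == target_language)
    return hits / len(outputs)

def toy_detector(text):
    """Hypothetical stand-in: flags German by a few function words, else English."""
    german_markers = {"der", "die", "das", "ein", "eine"}
    words = set(text.lower().split())
    return "de" if words & german_markers else "en"

outputs = [
    "Ein Hund auf der Wiese",
    "A dog on the grass",
    "Das ist eine Katze",
]
print(language_fidelity(outputs, "de", toy_detector))
```

In a real evaluation the detector would be a proper language-identification model, and fidelity would be reported alongside task accuracy rather than instead of it.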

Results

Performance for Included Languages:

Including a language during training significantly improves its performance, especially for low-resource languages.

Language Fidelity: Gains are dramatic when a language is explicitly included during training.

More multilingual training improves fidelity even for languages not included in training.

Impact on Previously Included Languages:

Negligible Drawbacks: Adding new languages has minimal or no negative impact on the performance of previously included languages.

Steady Gains: Fidelity and task performance improve across all tiers, even for unseen languages, when training includes additional languages.

Multilingual Training Feasibility:

Training with many languages is computationally viable and beneficial without significant trade-offs.

RQ2: Language Distribution in Instruction-Tuning

Objective

This section examines the optimal balance between English and multilingual data in instruction-tuning. The aim is to determine if allocating more of the training budget to non-English languages enhances multilingual performance or if it introduces noise, offsetting the benefits.

Experimental Setup

Language Mix:

Experiments use the L100 setup, consisting of 100 languages.

English Proportion (E%): Varies the percentage of the data budget allocated to English from 1% to 90%.

The remaining (100−E)% is split equally among the 99 non-English languages.

Configurations: Six setups were tested: E∈{1,10,25,50,75,90}
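A sampler for such a mixture could look like the sketch below. The placeholder language codes (`lang_01` and so on) and the use of weighted random sampling are assumptions for illustration; the summary only specifies the shares, not how the data is actually drawn.

```python
import random

def mixture_weights(english_percent, n_other=99):
    """Percent of the budget per language: E% English, the rest split equally."""
    other = (100.0 - english_percent) / n_other
    weights = {"en": float(english_percent)}
    weights.update({f"lang_{i:02d}": other for i in range(1, n_other + 1)})
    return weights

def sample_languages(weights, k, seed=0):
    """Draw k language labels in proportion to their weights."""
    rng = random.Random(seed)
    languages = list(weights)
    return rng.choices(languages, weights=[weights[l] for l in languages], k=k)

for e in (1, 10, 25, 50, 75, 90):
    w = mixture_weights(e)
    print(f"E={e:>2}%: each non-English language gets {w['lang_01']:.3f}% of the budget")

batch = sample_languages(mixture_weights(50), k=1000)
print("English samples in a batch of 1000:", batch.count("en"))
```

Even at E=1% each non-English language receives only 1% of the budget, so the experiment is really about how much English to trade away, not about giving any single language a large share.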

Results

Peak Performance:

Optimal results for all language tiers (T1–T5) occur when 25% to 75% of the data is in English.

E=50% provides the most balanced performance across all tiers.

Task-Specific Trends:

Multilingual Tasks (e.g., XM3600, MaXM, BIN-MC):

Benefit from more multilingual data.

Performance drops when E>75%

Higher-Resource Tasks (e.g., MTVQA, xGQA, MaRVL):

Perform better with more English data.

Slight drops observed when E<25%

Low-Resource Languages:

Gain substantially with increased multilingual data, especially at lower E values.

Tasks focusing on low-resource languages, like XM3600, see notable improvements.

Validation with Llama 3:

Trends are consistent with Phi 3.5.

Best results for:

T1 and T2 at E=10%

T5 and English at E=90%

The best overall balance across all tiers at E=50%

Key Insight:

E=50% is chosen as the optimal instruction-tuning mix, balancing performance across multilingual and high-resource tasks.

RQ3: Language Distribution in Pre-Training

Objective

Explore the impact of varying language distributions during pre-training (image-caption pair learning). The study investigates whether balancing English and multilingual portions leads to better performance compared to unbalanced distributions.

Experimental Setup

Instruction-Tuning Fixed:

Uses the L100 setup with E_IT=50% as the instruction-tuning distribution.

Pre-Training Mix:

Varies the proportion of English image-caption pairs E_PT in the pre-training data:

E_PT∈{100%,50%,1%}

Non-English data is evenly distributed across the other 99 languages.

Results

English-Only Pre-Training (E_PT=100%):

Benefits English tasks.

Negligible effect on performance for non-English languages.

Multilingual Pre-Training (E_PT=50%):

Substantial improvements across all language tiers, particularly for low-resource languages (T1 and T2).

Provides the most balanced multilingual performance.

Minimal English Pre-Training (E_PT=1%):

Does not degrade multilingual performance.

Does not improve results significantly compared to E_PT=50%

RQ4: Improving on Multilingual Text-in-Image Tasks

Objective

This section explores improving multilingual understanding of text-in-image tasks, which present unique challenges because text embedded in images is not easily translatable. The study evaluates the effectiveness of synthetic multilingual OCR data in enhancing performance across languages.

Evaluation Dataset

SMPQA (Synthetic Multilingual Plot QA):

A new multilingual evaluation dataset designed to test two key skills:

Text recognition: Reading and outputting text from images.

Text grounding: Linking prompt text to corresponding text in the image via yes/no questions (e.g., “Is the bar with label $Label the largest?”).
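The grounding question format lends itself to a template. The sketch below fills the quoted `$Label` placeholder with Python's `string.Template` and derives the yes/no answer from bar heights. The bar labels and values are invented, and how SMPQA actually builds its plots and answers is not described here, so treat this as an illustration of the question format only.

```python
from string import Template

GROUNDING_TEMPLATE = Template("Is the bar with label $Label the largest?")

def grounding_example(bars, label):
    """Build one yes/no grounding question from a {label: height} mapping."""
    question = GROUNDING_TEMPLATE.substitute(Label=label)
    answer = "yes" if bars[label] == max(bars.values()) else "no"
    return question, answer

# Invented plot data for illustration.
bars = {"Haus": 3, "Baum": 7, "Auto": 5}
for label in bars:
    print(grounding_example(bars, label))
```

Because the answer is binary, a model that cannot read the script at all scores around 50%, which makes failures on unfamiliar scripts easy to spot.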

Coverage:

5 Latin-script languages (one from each language tier).

6 major non-Latin-script languages.

Experimental Setup

Synthetic Data Generation:

Based on the Synthdog approach (Kim et al., 2022).

Training Data:

500k synthetic text-in-image samples added during pre-training.

A subset of 50k samples included in instruction-tuning.

Language Distribution:

Proportion of English data E∈{100%,50%,1%}

Non-English data evenly distributed across 99 languages.

Latin-Down Setup:

Double the training budget for 32 non-Latin-script languages.

Halve the budget for Latin-script languages (excluding English).
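The Latin-Down reweighting can be sketched as follows. Keeping the English share fixed and rescaling the non-English shares so the total budget is unchanged are assumptions of this sketch; the summary only states the doubling and halving. The three-language example is a toy, not the real language list.

```python
def latin_down_weights(base_weights, non_latin, english="en"):
    """Double non-Latin shares, halve Latin shares, keep English unchanged."""
    scaled = {
        lang: weight * (2.0 if lang in non_latin else 0.5)
        for lang, weight in base_weights.items()
        if lang != english
    }
    # Rescale so the non-English languages still use the same total budget.
    non_english_budget = sum(w for l, w in base_weights.items() if l != english)
    factor = non_english_budget / sum(scaled.values())
    adjusted = {lang: weight * factor for lang, weight in scaled.items()}
    if english in base_weights:
        adjusted[english] = base_weights[english]
    return adjusted

# Toy example: one Latin-script and one non-Latin-script language.
base = {"en": 0.5, "de": 0.25, "ru": 0.25}
print(latin_down_weights(base, non_latin={"ru"}))
```

Whatever the exact normalization, the effect is the same: a non-Latin-script language ends up with four times the share of a Latin-script one.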

Fine-Tuning:

The image encoder is unfrozen and fine-tuned to optimize performance.

Results

Baseline Models:

Models without pre-training or OCR training:

Perform well on English and Latin-script languages.

Fail on non-Latin scripts, with performance near random.

Impact of Pre-Training:

Adding the pre-training step improves multilingual performance compared to models trained only via instruction-tuning.

Likely due to image-text pairs in the pre-training data explicitly linking captions and text in images.

Effect of Synthetic Data:

English-Only OCR (100% English):

Substantially improves performance across all languages, even for non-Latin scripts.

Multilingual OCR Data:

Further enhances performance across scripts.

Does not degrade English performance, even when English constitutes only 1% of the training data.

Critical Role of Fine-Tuning:

Unfreezing and training the image encoder is essential for optimal performance across all languages and scripts.

Latin-Down Setup:

Skewing the training budget towards non-Latin scripts reduces the gap slightly.

However, the gap between Latin and non-Latin scripts remains significant.

Hypothesis: Much larger volumes of text-in-image training data for non-Latin scripts are necessary to achieve parity.

Key Findings

Scalability of Multilinguality:

The "curse of multilinguality" (trade-offs from including more languages) is largely absent.

Training with up to 100 languages significantly improves performance for newly added languages without degrading prior language performance.

Language Exposure vs. Data Share:

Language exposure is more important than allocating large proportions of training data to each language.

Including 25–50% multilingual data is optimal for most scenarios; beyond this, performance may saturate or degrade.

Text-in-Image Challenges:

Adding synthetic OCR data boosts performance across scripts, but a significant gap remains between Latin and non-Latin scripts.

Practical Implementation:

The authors trained Centurio, a 100-language LVLM, using these findings to set the data distribution across languages during training.

Centurio achieves state-of-the-art performance across 14 tasks and 56 languages, particularly excelling in low-resource languages compared to popular LVLMs like Qwen2-VL and InternVL 2.5.